Introduction

Helm is a powerful tool and the de facto standard for managing Kubernetes applications. Over time, charts grow complex and you really need to test them to avoid regressions. There are several ways and levels to do so. Here I’ll explain what we ended up doing at my company with helm-unittest.

At first, we followed the documentation and wrote stuff like:
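A test in that style might look like the following sketch. The file name, suite name, and image values are illustrative assumptions: they presume a chart whose Deployment builds its image from image.repository and image.tag, as charts scaffolded by helm create do.

```yaml
# tests/deployment_test.yaml (hypothetical file name)
suite: deployment
templates:
  - templates/deployment.yaml
tests:
  - it: uses the image tag from values
    set:
      # Illustrative values, chosen only for this example
      image.repository: nginx
      image.tag: "1.2.3"
    asserts:
      # The rendered document must be a Deployment
      - isKind:
          of: Deployment
      # The first container must use the repository and tag set above
      - equal:
          path: spec.template.spec.containers[0].image
          value: nginx:1.2.3
```

Each assertion targets one path in one rendered manifest, so it says nothing about the rest of the chart.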


We added such tests whenever we created a new feature or fix. This method checks for very specific items in the rendered chart, which is nice.

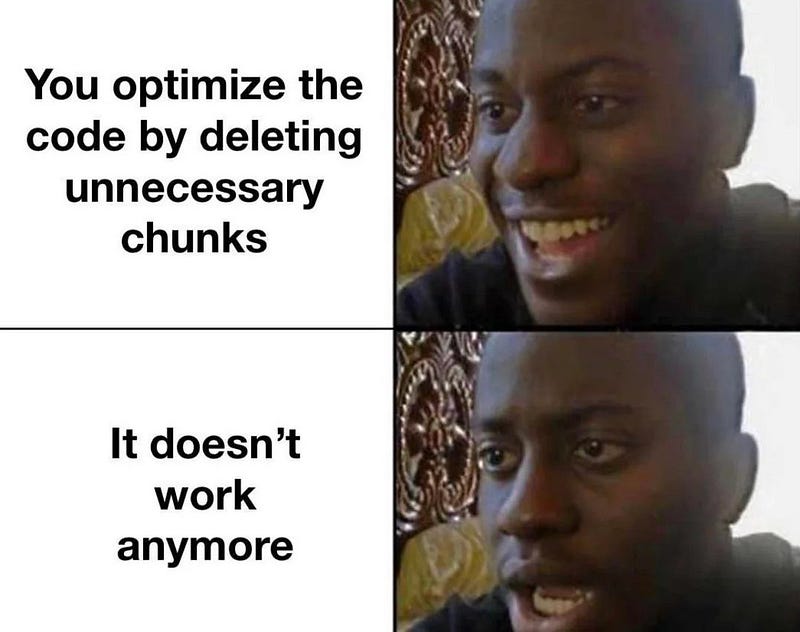

However, over time we found that it doesn’t scale well and, more importantly, doesn’t cover regressions well enough. It concentrates on very specific checks: what if the value you set in the test (image.tag here) is also used somewhere else in the chart? You might have forgotten about it after weeks or months, or a new contributor might not know. This means someone can break something without noticing, and your end users will be the ones to complain.

Fortunately, helm-unittest supports snapshot testing! Just like other tools in the industry (Jest, for example), it compares the rendered chart to a snapshot taken earlier. Any difference is reported, so you can make sure that touching that one little thing doesn’t break anything elsewhere in the chart.

Snapshot testing

Create chart

To keep it simple, we’re going to create a basic chart, for example with helm create. This gives us a regular chart to work with; now we need to add our tests.

Tip

You can find the chart source here.

Tests structure

To hold the tests, we’ll add this structure to our chart:

- tests/snap_deployment.yaml: describes what to test. I follow the naming <testMethod>_<k8sObject>.

- tests/values/: this folder holds several Helm values files so we can reuse them depending on what we want to test. The advantage is that values stay stable across tests, so there are no surprises due to a typo or the like.

Add tests

Info

You’ll first need to install Helm and the helm-unittest plugin.

Create a tests/snap_deployment.yaml:
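As a minimal sketch, the suite below renders the Deployment template with a shared values file and compares the whole manifest to the stored snapshot. The suite and test names are illustrative, and the template path assumes the default templates/deployment.yaml. Depending on your helm-unittest version, matchSnapshot may need an explicit path.

```yaml
# tests/snap_deployment.yaml
suite: deployment snapshots
templates:
  - templates/deployment.yaml
tests:
  - it: renders the deployment with base values
    values:
      # Values file paths are resolved relative to this test file
      - ./values/base.yaml
    asserts:
      # No path given: snapshot the whole rendered manifest
      - matchSnapshot: {}
```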


And a simple tests/values/base.yaml:
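The values below are only an illustration of what such a file can hold. The keys assume a chart scaffolded by helm create (replicaCount, image.repository, image.tag); adapt them to whatever your chart actually exposes.

```yaml
# tests/values/base.yaml
# Illustrative values; key names assume a `helm create` chart.
replicaCount: 1
image:
  repository: nginx
  tag: "stable"
```

Pinning every value that influences the output keeps the snapshot independent of later changes to the chart defaults.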


You can now execute the tests to generate a first snapshot:

helm unittest -f tests/snap_deployment.yaml .

You should now have a tests/__snapshot__/snap_deployment.yaml.snap file. It contains the manifests Helm rendered from your chart with the provided values.

You will need to review the manifests to make sure they are what you expect. If you’re happy with them, you can commit the whole thing, including the snap files.

Break tests

You’ll now update tests/values/base.yaml:
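For instance, bumping the replica count is enough to change the rendered Deployment. Only the changed key is shown, and its name is an assumption based on a chart scaffolded by helm create.

```yaml
# tests/values/base.yaml
# Changed on purpose to make the snapshot comparison fail.
# The key name assumes a `helm create` chart.
replicaCount: 3
```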


Then run the tests again. The replica count no longer matches the previous snapshot, so helm-unittest is not happy 😭

You now have several choices:

- fix the chart

- fix the values

- if the change is expected, update the snapshots

Values management

Earlier, we saw that we can read values from files. That’s convenient, but sometimes it would be nice to use a values file and override just one value. It’s super easy to do:
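A sketch of how this can look, combining the values and set keys of a test job. The suite name, test name, and overridden tag are illustrative assumptions.

```yaml
# tests/snap_deployment.yaml
suite: deployment snapshots
templates:
  - templates/deployment.yaml
tests:
  - it: renders the deployment with a custom image tag
    values:
      # Start from the shared values file
      - ./values/base.yaml
    set:
      # Override a single key on top of the values file
      image.tag: "2.0.0"
    asserts:
      - matchSnapshot: {}
```

Values given under set take precedence over those loaded through values, so the shared file stays untouched and each test states only what makes it different.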


Update snapshots

You made a change and need to update the snapshots. You could edit them manually, but it is recommended to refresh them with the CLI:

- update a single snapshot:

helm unittest -f tests/snap_deployment.yaml . -u

- update them all:

helm unittest -f 'tests/*.yaml' . -u

Tip

It is advised to refresh snapshots only when git doesn’t already report them as modified. It will be easier for you to detect changes from a clean state.

GitLab job

I recommend running those unit tests on your PRs/MRs. Here’s an example for GitLab that also reports the test results in the MR, thanks to the JUnit export format:
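The job below is a sketch rather than our exact configuration. The job name and report file name are arbitrary, and the image is a hypothetical one that is assumed to ship Helm with the helm-unittest plugin preinstalled; replace it with an image you trust.

```yaml
# .gitlab-ci.yml
helm-unittest:
  stage: test
  # Hypothetical image: assumed to contain Helm and the helm-unittest plugin
  image: registry.example.com/tools/helm-unittest:latest
  rules:
    # Run on merge request pipelines
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
  script:
    # -t selects the report format, -o the report file
    - helm unittest -f 'tests/*.yaml' -t JUnit -o junit.xml .
  artifacts:
    # Upload the report even when tests fail, so failures show up in the MR
    when: always
    reports:
      junit: junit.xml
```

Because the snapshot files are committed, the job needs nothing beyond a checkout of the chart to detect a drift.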
